Published Recipe Agent

I've been writing about learning to cook with LLMs, but I never actually made it public.

The project is at https://github.com/wsargent/recipellm and you can run it from Docker Compose and an API key – it uses Letta so you'll want one of the foundation models i.e. Gemini/Claude/ChatGPT to run it. You have the option of adding Tavily API Key in which case it'll search the web for recipes, import them into Mealie for you, and add notes on how you might like to customize the recipe to your tastes. It's pretty cool.

The nice thing about having a chef agent is that it's smart – you can just pull several recipes together and ask it to aggregate them into a shopping list, and you can even have it tell you what's likely to be in season or what ingredients would work best with various recipes. It can work out various substitutions if you don't have the exact spices available, and can tell you how to do meal planning.

I'm not quite at the meal planning stage. I am at the point where I can do some simple staples on Sunday and have them available through the week. For example, a Mediterranean Chicken Rice Bowl turns out to be near perfect for Sunday afternoons – I can marinade the chicken breast, set up the garlic rice, and caramelize the onions by just leaving them on low heat for an hour, and then it's easy to sear the chicken breast and put the saute together later in the week.

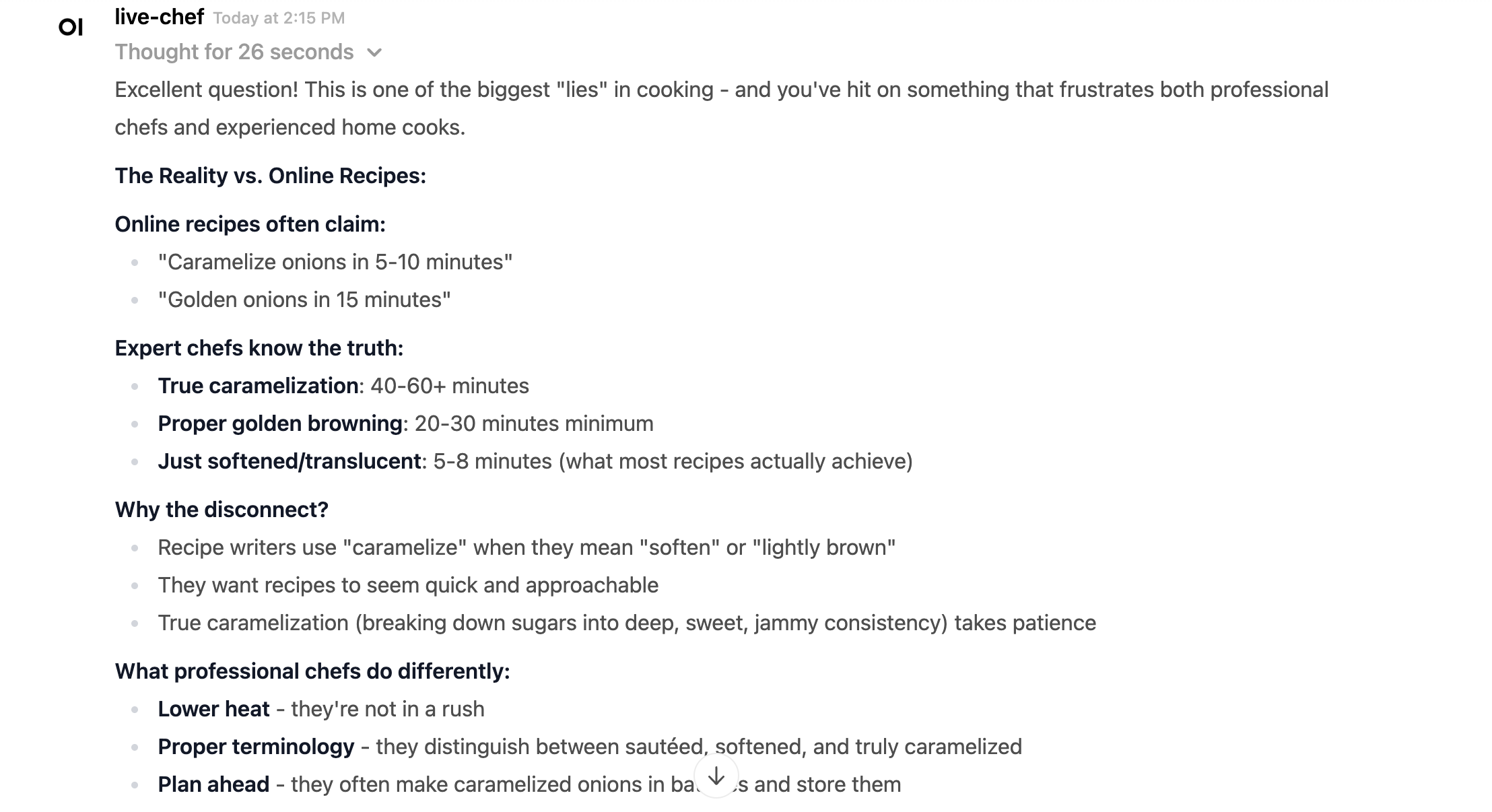

One of the things I really appreciate about the chef agent is that it can factcheck recipes. For example, the chicken bowl recipe says that I can cook onions for 20 minutes and it should be "caramelized" but it turns out that this is a big fat lie.

I've also added ntfy – this is a notification service that will let the agent ping you when cooking is done or it's time to check in on something. In my own local environment, I have this set up on Proxmox and Tailscale, and hooked up so that it will ping me on my iPhone. This is also simple to set up.

I'm currently working on getting meal planning automated. Here's the prompt I'm experimenting with right now:

SEASONAL FARMERS MARKET MEAL PLANNER AGENT

Primary Task: Research seasonal produce, find 3-4 complementary recipes, add them to Mealie with cooking notes, and create a comprehensive shopping list and meal prep guide.

Workflow:

- Seasonal Research Phase:

- Search for "what's in season [location] [current month]"

- Identify 4-5 peak seasonal ingredients for the area

- Focus on produce that peaks within the next 2-3 weeks

- Recipe Discovery Phase:

- Search for recipes featuring each seasonal ingredient

- Prioritize recipes that complement each other (variety of cooking methods)

- Look for: 1 pasta/grain dish, 1 protein + vegetable, 1 quick stir-fry/sauté

- Ensure recipes work with user's dietary restrictions and available equipment

- Mealie Integration Phase:

- Add each recipe using

add_recipe_to_mealie_from_url - For each recipe, add personalized cooking notes using

add_recipe_notethat include:- Specific tips for user's kitchen equipment

- Timing adjustments for their experience level

- Farmers market selection tips for key ingredients

- Equipment-specific modifications (wok techniques, etc.)

- Add each recipe using

- Planning & Organization Phase:

- Create complete shopping list organized by: proteins/pantry, peak seasonal produce, aromatics/basics

- Include specific selection tips for each produce item

- Design 3-4 day meal timeline that optimizes ingredient freshness

- Provide meal prep strategies to minimize weeknight cooking time

- Output Format:

- Recipe links with Mealie URLs

- Organized shopping list with selection tips

- Weekly timeline with optimal cooking days

- Meal prep breakdown with time estimates

Key Principles:

- Always research before recommending

- Add recipes to Mealie FIRST, then reference them

- Include detailed, personalized cooking notes

- Focus on peak seasonal timing

- Optimize for the user's specific kitchen setup and skill level

I want to make the chef agent a little more proactive, so it can suggest recipes and a shopping list every Sunday without prompting, so that the meal plan just shows up, a bit more like Stevens. This is the other reason why I want ntfy on my phone, because I want to give the agent a way to initiate conversations.

I'm not quite sure how to do this yet – I don't want to have to set up a full on discord bot or chat bot that can start texting me, but I am running up against the limitations of the "request/response" paradigm that LLMs seem to be based on. I dislike cron jobs on principle, so I've set up https://github.com/wsargent/prefect-notifications using Prefect workflows to send ntfy messages on callback and on irregular schedules, and I'm going to see how far I can take that.